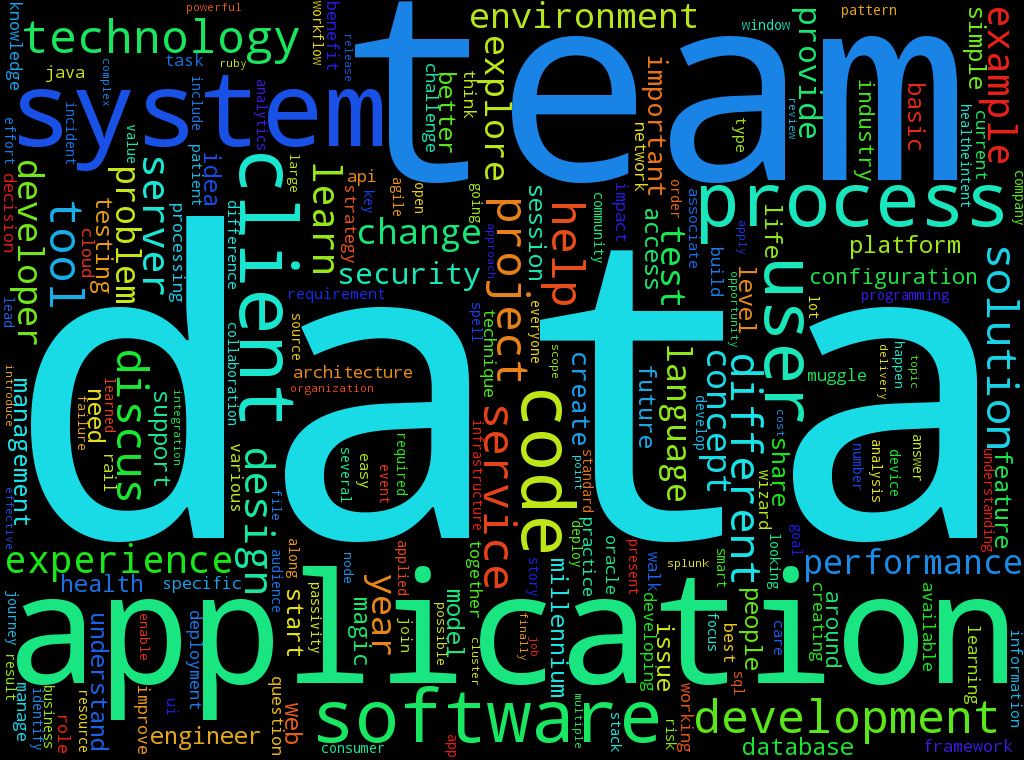

DevCon 2016 Word Cloud

Every year we hold an internal developers conference called DevCon. This year we had 295 submissions for talks, ranging from a deep technical dive into the inner workings of Kafka to a reflection on the power of office pranks. I wondered what, if anything, these submissions had in common. Were there any common themes or topics being discussed? To find out, I decided to visualize the talk submissions in a word cloud to create an easily understandable (and hopefully aesthetically pleasing) view of which topics were most common.

The first step was some basic data cleaning so I could get a good set of words to visualize. I used Python to read the submissions into a single string, lowercase all words, and then remove all common contractions.
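
The original snippet for this step is not reproduced here, so the following is only a minimal sketch of the cleaning described above. The function name `clean_text` and the short `CONTRACTIONS` set are illustrative assumptions, and "remove" is interpreted as dropping the contraction entirely rather than expanding it.

```python
# Illustrative, deliberately short list of contractions (an assumption,
# not the list used for the actual word cloud).
CONTRACTIONS = {"don't", "can't", "won't", "it's", "i'm", "we're", "you'll"}


def clean_text(submissions):
    """Join submissions into one lowercase string with contractions dropped."""
    text = " ".join(submissions).lower()
    # Normalize curly apostrophes so "don’t" matches "don't".
    text = text.replace("\u2019", "'")
    kept = [
        word
        for word in text.split()
        # Strip surrounding punctuation before checking the contraction list.
        if word.strip(".,!?;:\"()") not in CONTRACTIONS
    ]
    return " ".join(kept)


if __name__ == "__main__":
    print(clean_text(["We don't Know", "It's Kafka time"]))
```

In practice the submissions would be read from files or a spreadsheet export first; here they are passed in as a plain list of strings to keep the sketch self-contained.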


At this point I had 32,987 total words. A word cloud of this dataset was too noisy, dominated by common words like “the” and “talk”. In natural language processing these unwanted common words are called “stop words”. To remove them, I split the submissions into a list of words and kept only the words that were not in a list of common English words, supplemented by words specific to this data set, such as “Cerner” and “presentation”.
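
As a rough sketch of this filtering step, assuming the cleaned text is a single lowercase string: the two stop word sets below are tiny placeholders (a real list of common English words would be much longer), and `remove_stop_words` is a hypothetical helper name.

```python
import re

# Placeholder lists for illustration only; a real run would use a full
# list of common English words.
ENGLISH_STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "for", "on"}
CUSTOM_STOP_WORDS = {"cerner", "presentation", "talk"}
STOP_WORDS = ENGLISH_STOP_WORDS | CUSTOM_STOP_WORDS


def remove_stop_words(text, stop_words=STOP_WORDS):
    """Split lowercase text into words and drop any that are stop words."""
    words = re.findall(r"[a-z']+", text)
    return [word for word in words if word not in stop_words]


if __name__ == "__main__":
    print(remove_stop_words("the talk is on kafka and the power of pranks"))
```

Keeping the stop words in a set makes each membership check constant time, which matters little at this scale but keeps the list comprehension simple.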


This left me with a list of 14,291 words, 4,264 of them distinct. When visualized, I noticed many similar words were taking the highest spots, such as “team” and “teams” or “technology” and “technologies”. To remove some of this noise I turned to lemmatization. Lemmatization is the process of finding a canonical representation of a word, i.e. its lemma. For example, the lemma for the words “runs” and “running” is “run”. I used the popular Python library Natural Language Toolkit for lemmatization.
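
A sketch of how this could look with NLTK's `WordNetLemmatizer`. The helper names `lemmatize_words` and `nltk_lemmatizer` are assumptions for illustration, and the NLTK import is kept inside a function because it needs the library installed and the WordNet corpus downloaded (`nltk.download("wordnet")`). The demo at the bottom uses a toy lookup table so it runs without NLTK.

```python
def lemmatize_words(words, lemmatize):
    """Map every word to its lemma using the supplied lemmatize callable."""
    return [lemmatize(word) for word in words]


def nltk_lemmatizer():
    """Return NLTK's WordNet lemmatize function.

    Requires `pip install nltk` and a one-time nltk.download("wordnet").
    By default it treats every word as a noun.
    """
    from nltk.stem import WordNetLemmatizer

    return WordNetLemmatizer().lemmatize


if __name__ == "__main__":
    # Toy stand-in for the real lemmatizer, for demonstration only.
    toy_lemmas = {"teams": "team", "technologies": "technology"}
    words = ["team", "teams", "technology", "technologies"]
    lemmas = lemmatize_words(words, lambda w: toy_lemmas.get(w, w))
    print(len(set(words)), "distinct words before,", len(set(lemmas)), "after")
```

With NLTK available, the call would be `lemmatize_words(words, nltk_lemmatizer())`.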


This reduced the dataset to 3,834 distinct words. Now that the dataset was cleaned, common words were filtered out, and similar words were combined, it was time to create the word cloud using a project called word_cloud.
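
One way to render the cloud with the word_cloud project (imported as `wordcloud`) is sketched below. The image size, background color, and output file name are arbitrary assumptions, not the settings used for the image at the top of this post, and `word_frequencies` and `render_word_cloud` are hypothetical helper names.

```python
from collections import Counter


def word_frequencies(words):
    """Count how often each word appears in the cleaned word list."""
    return Counter(words)


def render_word_cloud(frequencies, path="wordcloud.png"):
    """Draw a word cloud from word counts and save it as an image.

    Requires `pip install wordcloud`; sizes and colors are assumed values.
    """
    from wordcloud import WordCloud

    cloud = WordCloud(width=800, height=400, background_color="white")
    cloud.generate_from_frequencies(frequencies)
    cloud.to_file(path)


if __name__ == "__main__":
    freqs = word_frequencies(["data", "team", "data", "kafka"])
    print(freqs.most_common(2))
    # render_word_cloud(freqs)  # uncomment once wordcloud is installed
```

Passing counts through `generate_from_frequencies` keeps the library from re-tokenizing the text, so the cleaning done in the earlier steps is what ends up in the picture.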


Success! It is obvious what some of the most common themes are, such as “data” and “team”. Possible future steps for cleaning up the data would be grouping noun phrases, such as “software engineer”, into single words, or possibly removing common spelling mistakes using a spell checking library like PyEnchant.

You can check out the full code on GitHub.